The AI trap: The technology that caused everyone to forget

The Neural Cost of Convenience

I cannot overstate the importance of protecting one’s brain health. The brain is our interpreter, visualizer, navigator, memory bank, and doorway into the world we know as reality. When the brain fails to function properly it can no longer interpret, visualize, navigate or remember correctly —resulting in the creation of a false reality. Our beliefs do not constitute truth. So if the brain is unable to operate as designed it will become beholden to what isn’t, failing to properly decipher what is true. Protecting our brain health is vital to our very existence.

Ever since cell phones became ubiquitous, I’ve been concerned for my brain health. Over the last two decades I’ve noticed a change in the way that we as humans process, store, and recall information. The changes started slowly with the advent of the smartphone, which led us all down the path of loading contacts into shiny devices tucked away in our pockets. While this removed the need to memorize crucial phone numbers, it also left us with a problem: the struggle to remember phone numbers when we need them.

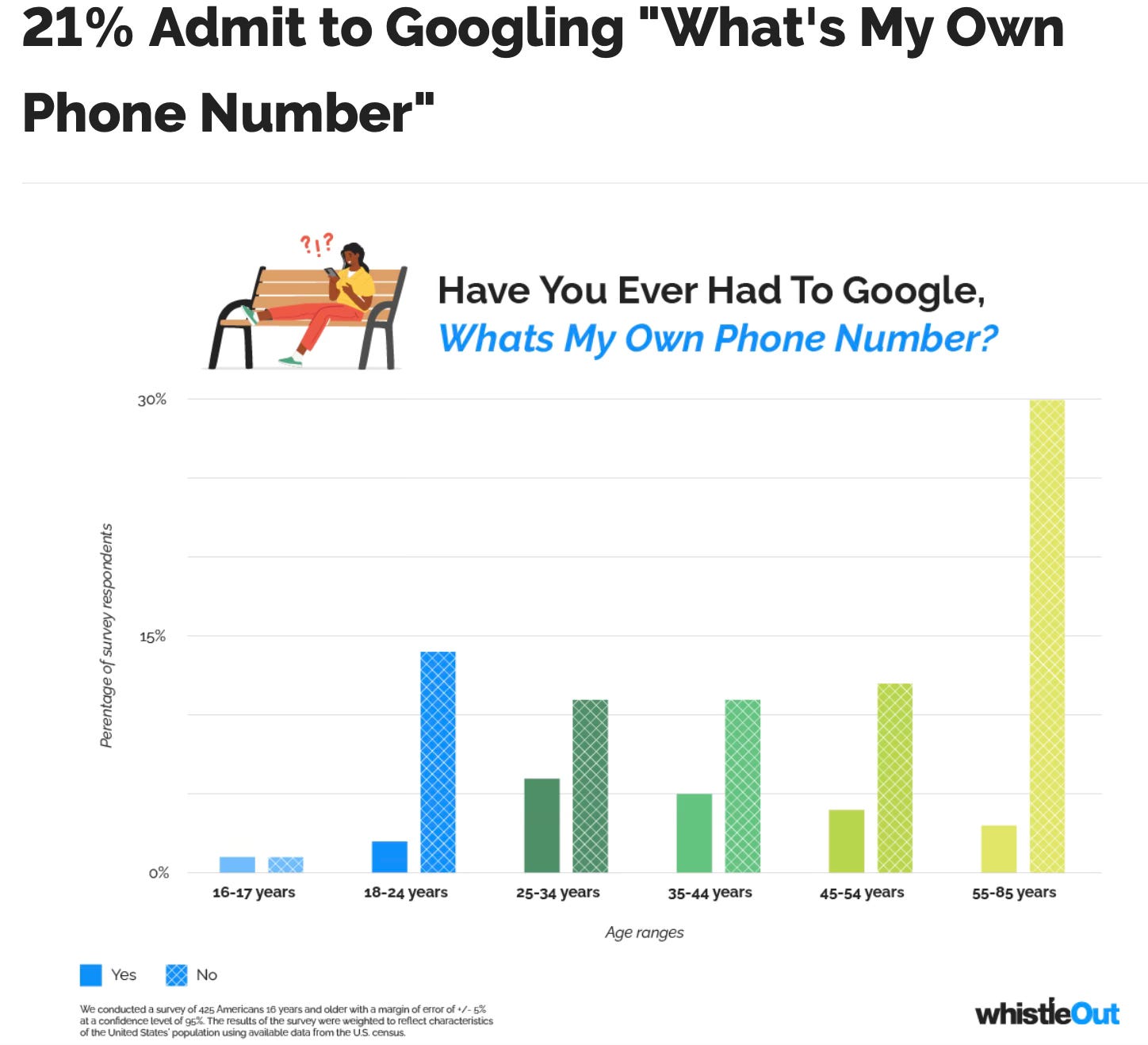

A 2020 Panda Security survey dubbed this phenomenon “digital amnesia”: the effect of a technology dependence that inhibits one’s ability to remember things. In fact, this “amnesia” effect is so bad that over 20% of mobile phone users have to Google their own phone number because they can’t remember it.

According to the latest data from WhistleOut, about 50% of mobile users have only 2-5 numbers committed to memory, with those over the age of 55 remembering the most numbers. This is most likely due to the younger generation’s overreliance on mobile technology.

This is just one simple example of how technology is used to offload important information. Another clear example is the overreliance on global positioning systems, also known as GPS. There was a time when we relied on our built-in wayfinding, not GPS, to locate a new destination. Sometimes this included the use of travel maps. I still remember the days when my family would take cross-country trips. My dad would pull out his latest giant map to plot our path ahead of time, overlaying the highways and interstates with bright highlighted markings.

By the time I started to drive, around the late 1990s, an internet mapping service known as MapQuest was becoming popular. MapQuest used satellite imagery which allowed users to print directions to a destination from its website. It was an amazing technology at the time, as long as you remembered to print out the directions beforehand and as long as the roads hadn’t changed since the last satellite image was taken. However, this technology provided an opportunity for the cognitive offloading of very important information and of a very necessary skill: being able to spatially orient oneself within an environment.

A few years later, with the advent of smart mobile devices and enhanced GPS technologies, users began to put their minds on cruise control and wait for the audible instructions to tell them where to turn. Some navigation apps, such as Waze, took it up a notch. Waze went as far as warning you of nearby police camped along the interstate just waiting for speeders. This allowed for even more cognitive offloading, as it relieved drivers of scanning the roads for police while closely watching their speed. I remember thinking this technology was a godsend, as I was a heavy commuter with a lead foot at the time. Because of what I’ve learned while working in tech, I no longer share that sentiment.

I started my tech career in 1999, and over the last two decades I’ve watched society take what appears to be several steps back for humanity as our reliance on technology has increased. Regrettably, I was part of the social-tech revolution that polluted the internet with social check-in apps, replete with gamification and GPS tracking. The explosion of social media and the endless stream of content in an “always-on” and “accessible everywhere” service world have caused a fundamental shift in how we all approach life, as we’re constantly glued to our screens. When we look at how children are being trained up in today’s world, this societal change couldn’t be more obvious. I am one of the many tech sages who greatly minimize their kids’ access to the internet. Most people have zero idea how technology, and social applications in particular, are reshaping the brain. Everything is built on human psychology.

A National Trust study found that the current generation (kids aged 6 to 17) spends nearly half as much time outside as their parents’ generation did. A survey conducted in the U.K. found that just 27% of children said they regularly play outside their homes, compared to 71% of the baby boomer generation. This matches my experience over the last two decades. My family has lived in different neighborhoods across different states, and the streets are always quiet, even on weekends. While some tend to think the shift is a result of kidnapping fears that have swept the globe, and there’s some truth to it, I can’t say that I fully agree with it being the main cause. When I was a kid the same fears existed, which is why we were told never to take candy from a stranger, never to hop into strangers’ cars, never to talk to strangers, and always to travel in pairs. Parents used to go through Halloween bags out of an abundance of caution to ensure the candy was safe for their children. When we all went out to play, everyone knew to be home, or at least close to home, when the streetlights came on. While I believe parents today are more fear driven and overprotective, the concern for child safety has always existed. What didn’t exist, however, was a world where information technology is plugged into everyone’s lives and never shuts off. Because of this shift, there’s mounting concern that our brains are slowly changing for the worse and becoming atrophic.

If you’ve been keeping up with my work you’ll know that I’m a big believer in the idea of cosmic cyclical timing —the idea that time is not linear but is instead cyclical, repeating at particular points in order to reset the proverbial stage. To some the idea can seem a bit far-fetched. This is because we’ve been indoctrinated with the narrative of linear progression bolstered by macroevolutionary theory. The story that the human race started at 0 and will now progress towards infinity is seared deep into the minds of most people. But for years I’ve sensed that something is off in the world. I believe the continuum of infinite progress we live in is nothing more than an illusion. It is a fake reality that’s been meticulously curated by dark spiritual forces for the purposes of distraction. We’re all staring at the shiny object, worshipping our technology —Babel reborn. It is the very same technology that will usurp the human mind, causing us to yet again forget our past. You’re probably wondering how? I’ll try to explain.

With the current rise of artificial intelligence we are beginning to offload more of the brain’s cognitive functions. Such functions strengthen and maintain the brain’s ability to think critically and solve problems. Researchers are becoming concerned and have recently become more vocal about this potential danger. A National Library of Medicine opinion article released in 2024 concerning the impact of artificial-intelligence chatbots (AICs) on cognitive health had this to say:

AICICA (AIC Induced Cognitive Atrophy) refers to the potential deterioration of essential cognitive abilities resulting from an overreliance on AICs. In this context, CA (Cognitive Atrophy) signifies a decline in core cognitive skills, such as critical thinking, analytical acumen, and creativity, induced by the interactive and personalized nature of AICs interactions. The concept draws parallels with the 'use it or lose it' brain development principle (Shors et al., 2012), positing that excessive dependence on AICs without concurrent cultivation of fundamental cognitive skills may lead to underutilization and subsequent loss of cognitive abilities.

People may reduce their effort or shed responsibility while carrying out a task if an automated system performs the same function. It has been suggested that using technology convinces the human mind to hand over tasks and associated responsibilities to the system. This mental handover can reduce the vigilance people would typically demonstrate if carrying out those tasks independently.

I found this opinion article pretty fascinating but also incredibly worrisome. It delves into the topic of EMT (Extended Mind Theory) and describes how technology is used as an extension to our cognition. It’s pretty disturbing.

The EMT, proposed by Clark and Chalmers (1998), challenges the traditional boundaries of cognitive processes being confined within the human brain. According to the EMT, cognition extends beyond neural architecture and infiltrates into the tools we employ. Within this complex interplay, AICs assume a pivotal role, transforming from a mere artifact into an active contributor to our cognitive functioning. This symbiotic relationship between humans and AICs could promote cognitive offloading, a mechanism through which individuals utilize external aids to alleviate cognitive burdens. AICs facilitate this process; enabling individuals to delegate complicated cognitive tasks, supporting them navigate the complexities of modern life. Powerful AICs, such as Chat Generative Pre-training Transformer (ChatGPT), Google Bard, Bing Chat, Perplexity AI, equip users with remarkable abilities, empowering them to conquer complex problem-solving, generate creative outputs, and instantaneously access vast amounts of information (Dergaa et al., 2023). Just as excessive reliance on the Internet has produced unintended cognitive consequences (Grissinger, 2019), uncontrolled cognitive offloading through the utilization of AICs necessitates critical examination. However, the impact of AICs, based on their output and nature of interaction, may pose a substantially negative effect on cognitive health. Unlike general Internet use, AICs engage users in a more personalized, interactive manner, potentially leading to a deeper cognitive reliance.

The article suggests that AI chatbots elicit overreliance through trust. Because chatbots mimic human intelligence, people develop a tendency to trust what is being communicated, thereby deepening the personal connection to the technology. This deepened connection increases cognitive reliance, and the result is more cognitive offloading. It becomes a vicious cycle, and an extremely concerning one when we take into consideration this technology’s ability to get it wrong. One of the chief concerns when using LLMs (Large Language Models) such as ChatGPT is their propensity to hallucinate, depending on the subject matter. When these AI models hallucinate they essentially fabricate false information, so caution must be exercised when using them. I do believe, however, that the error or hallucination rates will decrease over time as the technology improves. But this will only increase our reliance.

I also came across an interesting article covering a scientific paper out of the University of Monterrey that looks at the concern of AI supplanting human cognition. The same fears are shared:

But Domínguez’s paper warns of the potential risks associated with integrating AI so closely into our cognitive processes. A key concern is “cognitive offloading,” where humans might become overly reliant on AI, leading to a decline in our ability to perform cognitive tasks independently. Just as muscles can weaken without exercise, cognitive skills can deteriorate if they’re not regularly used.

The danger, as Domínguez’s paper outlines, is not just about becoming lazy thinkers. There’s a more profound risk that our cognitive development and problem-solving abilities could be stunted. Over time, this could lead to a society where critical thinking and creativity are in short supply, as people become accustomed to letting AI do the heavy lifting.

“Many people argue that there have been other technologies that allowed for cognitive offloading, such as calculators, computers, and more recently, Google search,” Domínguez explained. “However, even then, these technologies did not solve the problem for you; they assisted with part of the problem and/or provided information that you had to integrate into a plan or decision-making process.

“With ChatGPT, we encounter a tool that (1) is accessible to everyone for free (global impact) and (2) is capable of planning and making decisions on your behalf. ChatGPT represents a logarithmic amplifier of cognitive offloading compared to the classical technologies previously available.”

This is quite problematic when we think about how the brain works. If you’ve been around long enough then you undoubtedly have participated in cognitive offloading. As a species we are slowly handing over cognitive tasks to AI as we become more dependent on it. There are many people that are extremely wary of AI and as someone who has spent over 20 years in tech, I believe it’s warranted.

I recently watched a clip of Vice President JD Vance speaking at the Paris AI Summit in France. He extolled what he believes are the future opportunities that AI will provide, while stating that fears and excessive regulation around the technology will work against human “progress”. As much as I like JD Vance, and again as someone who has spent two decades working in tech, I believe he couldn’t be more wrong.

AI will replace a lot of jobs, period. When we talk about artificial intelligence we’re not talking about the creation of more dumb machines on an assembly line and faster rockets. AI doesn’t mimic physical tasking. It mimics cognitive tasking. Integrating an artificial intelligence layer within our world will drastically change how we live and interact as a human species, not just how we work. Why? Because we will no longer solely interact with just each other. Our dependence on AI and its decisioning will increase as well as our trust. I believe this will lead to the development of human relationships with AI and a deeper dependence on these relationships. A dependence on AI which can cognitively outperform a human will mean deeper relationships with AI instead of humans.

I don’t subscribe to the idea that humans will become more productive due to AI. In fact, I believe all we’ve done since the dawn of time is shift energy production around. Sure, those living in a developed world no longer need to farm all day or hunt for food. Those activities demanded a certain level of energy expenditure to keep society in motion. But we’ve simply shifted all of that energy into commuting through smog-filled cities only to sit at a desk as a slave to technology. We’ve transformed the way that we live by transferring human energy production into what we’ve built, with the hope that our technology would alleviate the need for human energy to keep society in motion. But I’d argue the energy still exists and we’re no more productive than we have been in the past. We’re simply doing different, less physically demanding things that have been disastrous to the human race.

We spend less time with our families, we incur more stress, we spend less time operating in our natural environment, and we’ve polluted and damaged the earth for productivity’s sake. The energy we once produced is now offloaded and transformed for our technology to use, and this has come at a cost. I would argue that as a species we are busier than ever as we attempt to juggle a million things at once, but we are far less productive and more stressed. I measure productivity based on outcomes, not outputs, as should everyone. Unfortunately, we live in a time where people have confused the outputs created by our technology with more or better outcomes.

If humans were batteries —which I’m hoping you’re seeing that we are acting as such— then artificial intelligence is coming to siphon off the last bit of our energy which is our cognition. As the brain faces atrophy, we will rely on AI to fill in the gap. AI will drain every last bit of our cognitive energy as we continue to offload human energy to be stored and used by our technology. I don’t see how a society can become more productive while facing brain atrophy. I do see how our technology and more specifically our machines can give us the appearance of being more productive. It’s because we’re still dealing with outputs only.

I’ve stated several times before that humans are not living according to God’s decree. And if humans aren’t living according to God’s decree, laid out in Genesis 1:26, then that means man will be pursuing what he’s not meant to pursue. This can only end in disaster, just like the Tower of Babel event. There is no other option. When something is designed for a specific purpose and is able to fulfill its purpose, the result will be harmony and order. Conversely, when whatever has been designed rejects or fails to fulfill its purpose, the result becomes chaos. There’s a necessary balance of chaos and order built into the cosmos, with an abundance of examples of chaos striking: a guard dog that fails to guard during an attack on its owner; a car that fails to start; a screw that fails to secure; soap that doesn’t clean; food that doesn’t satiate; medicine that fails to heal; an alarm clock that fails to go off; a bridge that buckles under the weight of cars; a dam that fails to hold back a river. All of this leads to chaos. Human beings failing to fulfill their purpose is no different. The only question left to answer is, when will chaos strike humanity again? And with an overreliance on our machines coupled with our inability to think critically about our world, who will be left in the future to remember what once was?

As I wrap up here, I want to share with you some things that I am doing to better protect my mind. Feel free to implement these tips and add others in the comment section.

Work on your spatial awareness: This can be done by playing video games with large maps a character must traverse, driving around without navigation in unfamiliar areas, or hiking.

Work on your problem-solving abilities: This includes completing puzzles and math problems.

Improve neural connections: This can be done by taking time out of your day to learn something new. Improving neural connections will help strengthen problem-solving abilities, memorization, and critical thinking skills.

Be wary of cognitive offloading: When you use AI, attempt to minimize cognitive offloading as much as possible. Remember to always be the “brain” behind AI.

As I stated earlier, I’ve spent quite some time in and around technology, not just as a user but as a developer and engineer. I’m a big believer in leveraging technology in ways that can augment human tasks, but with the caveat that it enables us to be better stewards of the Earth, not simply make bigger toys which encourage laziness. Yet I’m also incredibly cautious because I understand just how dangerous AI can be. I think it’s important to remember that technology itself can be neutral, but it is built by human hands that often have certain agendas, biases and motivations. AI will undoubtedly reflect the characteristics of its master. I see a potential future where ambition and hubris create a world with humans enslaved to their very own technology. I see this ending in disaster, with a reset of the species and planet becoming necessary to restore natural order: the defeat of chaos. This may give rise to a future civilization completely unaware of the mistakes made in the past, only to eventually repeat the same cycle as it chases human progress.

But hey, maybe I’m just tripp’n.

Thank you for reading. If you enjoyed it, please restack and share it! Feel free to leave comments as I’d love to hear your thoughts.
